The most common way to access large language models (LLMs) is through a chat interface such as ChatGPT or Copilot (formerly Bing Chat). Some web browsers have built LLMs in as well: Brave, for example, includes its Leo assistant, which is also available on Android. With all of these, you type in a query by hand and wait for the model to respond.

Another important way to access LLMs is through a programming API. Instead of typing into a chat window, you send a request to the model from code, in a language such as Python or C, and receive its response programmatically. OpenAI, for example, lets you call the GPT models behind ChatGPT from Python this way. Keep in mind, though, that most of these APIs are not free: you pay based on how much you use them.

In this article, I delve into the cost structure of these APIs, focusing on the concept of tokens, the difference between input and output tokens, and the most cost-effective LLMs to use via an API.

Using LLMs through APIs

Why would you access LLMs via an API instead of a web chat interface or a mobile app? There are several reasons. If you have an existing system and want to add LLM capabilities to it, an API lets you do that: your Python or C code can send data from your existing databases or information systems to the LLM and then work with its response. APIs are also useful for automation, which I personally use for tasks such as text analysis, summarization, translation, and content generation.
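As a rough illustration of calling an LLM from code, here is a minimal Python sketch that uses only the standard library. It assumes OpenAI's Chat Completions HTTP endpoint and the `gpt-3.5-turbo` model name; other providers such as Anthropic and Mistral use different URLs, headers, and request formats, and OpenAI's official `openai` package wraps all of this for you. The helper names `build_chat_request` and `parse_chat_response` are hypothetical, chosen for this example.

```python
import json
import urllib.request

# Assumption: OpenAI's Chat Completions endpoint. Other providers (Anthropic,
# Mistral, ...) use different URLs, headers, and JSON payloads.
CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a POST request asking `model` to answer `prompt`."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def parse_chat_response(payload: dict) -> tuple[str, int, int]:
    """Extract the reply text and the billed input/output token counts."""
    reply = payload["choices"][0]["message"]["content"]
    usage = payload["usage"]
    return reply, usage["prompt_tokens"], usage["completion_tokens"]
```

To actually send the request, you would pass it to `urllib.request.urlopen` with a valid API key and decode the reply with `json.load`; every such call is billed. The `usage` field of the reply reports the input (`prompt_tokens`) and output (`completion_tokens`) token counts that the price is based on.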

How AI APIs are priced

Calvin Wankhede / Android Authority

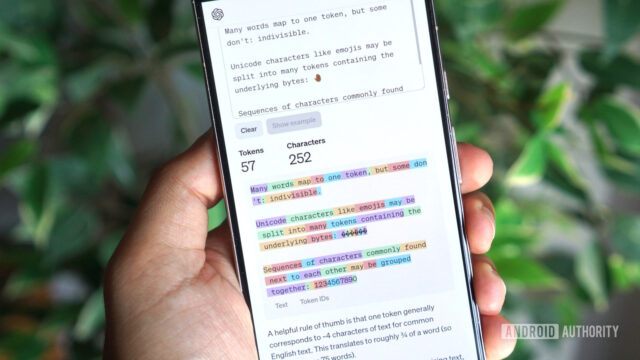

API usage is priced per token. A token is a chunk of text: often a whole word, but frequently only part of one, or a punctuation mark. LLMs split text into tokens with a tokenizer that has learned common character sequences, such as word roots and their variations, from large amounts of text. For English, a token is roughly four characters, or about three-quarters of a word. To give you an idea, 100 tokens are about 75 words, one or two sentences are about 30 tokens, a paragraph is about 100 tokens, and 1,500 words are about 2,048 tokens.
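These rules of thumb translate directly into a quick estimator. This is a minimal sketch assuming English text, and the function names are hypothetical; for exact counts you would run the model's own tokenizer (for OpenAI models, the `tiktoken` library), since the ratios vary with language and content.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate for English text: about 4 characters per token."""
    return round(len(text) / 4)


def estimate_tokens_from_words(word_count: int) -> int:
    """Rough estimate: a token is about 3/4 of a word, so tokens = words / 0.75."""
    return round(word_count / 0.75)
```

For 1,500 words, `estimate_tokens_from_words(1_500)` gives 2,000 tokens, in the same ballpark as the roughly 2,048 figure quoted above; both are approximations.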

Prices are quoted per million tokens, and there are two kinds of tokens: input tokens and output tokens. Input tokens cover your prompt and any data you send with it, while output tokens are the text the LLM generates in response. The two are priced separately, and output tokens usually cost more.
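Because input and output tokens are priced separately, the cost of a single request is cost = (input tokens × input price + output tokens × output price) / 1,000,000, with both prices in dollars per million tokens. A minimal Python version, with a hypothetical function name:

```python
def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request, given prices quoted per million tokens."""
    # Each token type is billed at its own rate; divide once to convert
    # "dollars per million tokens" into dollars.
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000
```

For example, at Claude 3 Haiku's $0.25 input and $1.25 output prices, a request with 2,000 input tokens and 500 output tokens costs `request_cost(2_000, 500, 0.25, 1.25)`, or $0.001125.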

Input token pricing matters most if your use case is input-heavy, such as summarizing large amounts of text or analyzing data. Output token pricing matters most if your use case is output-heavy, such as content creation, bulk generation, or language translation.
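To see which side of the bill dominates, you can compare the input and output costs of a job. The sketch below is illustrative: the function names and both workloads are hypothetical, and it uses Claude 3 Sonnet's prices from the table ($3 input, $15 output per million tokens). Since Sonnet's output tokens cost five times as much, input only dominates when you send more than five times as many input tokens as you get back.

```python
def cost_split(input_tokens: int, output_tokens: int,
               input_price_per_m: float, output_price_per_m: float) -> tuple[float, float]:
    """Return (input_cost, output_cost) in dollars; prices are per million tokens."""
    input_cost = input_tokens * input_price_per_m / 1_000_000
    output_cost = output_tokens * output_price_per_m / 1_000_000
    return input_cost, output_cost


def dominant_side(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> str:
    """Return "input" or "output", whichever contributes more to the bill."""
    input_cost, output_cost = cost_split(input_tokens, output_tokens,
                                         input_price_per_m, output_price_per_m)
    return "input" if input_cost >= output_cost else "output"


# Claude 3 Sonnet prices in $ per million tokens (from the table).
SONNET_IN, SONNET_OUT = 3.0, 15.0

# Hypothetical summarization job: a 10,000-token report condensed to 300 tokens.
print(dominant_side(10_000, 300, SONNET_IN, SONNET_OUT))  # input
# Hypothetical generation job: a 100-token prompt producing a 2,000-token article.
print(dominant_side(100, 2_000, SONNET_IN, SONNET_OUT))  # output
```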

The best affordable AI APIs

Pricing varies significantly between LLMs. Claude 3 Haiku is the cheapest of the APIs compared here, at $0.25 per million input tokens. At the other end, ChatGPT 4 costs $30 per million input tokens, while ChatGPT 4 Turbo charges $10 per million input tokens.

Output token pricing varies too, but Claude 3 Haiku is again the cheapest, at $1.25 per million output tokens. Claude 3 Opus is the priciest, at $75 per million output tokens.

The table below lists input and output prices for all the APIs compared here, ordered from cheapest to most expensive input.

| Model | Input Price per Million Tokens ($) | Output Price per Million Tokens ($) |
|---|---|---|
| Claude 3 Haiku | 0.25 | 1.25 |
| ChatGPT 3.5 | 0.5 | 1.5 |
| Mistral Small | 2 | 6 |
| Mistral Medium | 2.7 | 8.1 |
| Claude 3 Sonnet | 3 | 15 |
| Mistral Large | 8 | 24 |
| ChatGPT 4 Turbo | 10 | 10 |
| Claude 3 Opus | 15 | 75 |
| ChatGPT 4 | 30 | 60 |
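With the prices in one place, you can rank the models for a specific workload rather than by a single price. A minimal sketch: the `PRICES` dictionary is a snapshot of the table above (providers change their rates), and the function names are hypothetical.

```python
# (input, output) prices in $ per million tokens, copied from the table above.
PRICES = {
    "Claude 3 Haiku": (0.25, 1.25),
    "ChatGPT 3.5": (0.5, 1.5),
    "Mistral Small": (2.0, 6.0),
    "Mistral Medium": (2.7, 8.1),
    "Claude 3 Sonnet": (3.0, 15.0),
    "Mistral Large": (8.0, 24.0),
    "ChatGPT 4 Turbo": (10.0, 10.0),
    "Claude 3 Opus": (15.0, 75.0),
    "ChatGPT 4": (30.0, 60.0),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of processing the given token counts with `model`."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def rank_models(input_tokens: int, output_tokens: int) -> list[str]:
    """Model names sorted from cheapest to most expensive for this workload."""
    return sorted(PRICES, key=lambda m: workload_cost(m, input_tokens, output_tokens))
```

Running `rank_models(0, 1_000_000)` for a purely output-heavy workload, for instance, puts ChatGPT 4 Turbo ahead of Claude 3 Sonnet at these prices, even though Sonnet's input tokens are cheaper.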

In conclusion, the right LLM depends not only on price but also on capability: make sure the model you choose can actually handle the tasks you need it for.