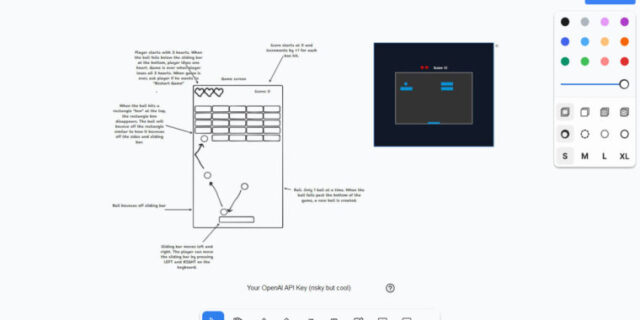

is super fun. I iterated through ~10 builds today and it cost me $0.90 using GPT4. The pong game is playable as described.”

On Wednesday, a collaborative whiteboard app maker called “tldraw” made waves online by releasing a prototype of a feature called “Make it Real” that lets users draw an image of software and bring it to life using AI. The feature uses OpenAI’s GPT-4V API to visually interpret a vector drawing into functioning Tailwind CSS and JavaScript web code that can replicate user interfaces or even create simple implementations of games like Breakout.

“I think I need to go lie down,” posted designer Kevin Cannon at the start of a viral X thread that featured the creation of functioning sliders that rotate objects on screen, an interface for changing object colors, and a working game of tic-tac-toe. Soon, others followed with demonstrations of drawing a clone of Breakout, creating a working dial clock that ticks, drawing the snake game, making a Pong game, interpreting a visual state chart, and much more.

Users can experiment with a live demo of Make It Real online. However, running it requires providing an API key from OpenAI, which is a security risk. If others intercept your API key, they could use it to rack up a very large bill in your name (OpenAI charges by the amount of data moving into and out of its API). Those technically inclined can run the code locally, but it will still require OpenAI API access.
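To make the billing risk concrete, here is a minimal sketch of how per-token charges add up. The function name `estimateCostUsd` is hypothetical, and the token counts and rates in the example are placeholder assumptions for illustration, not OpenAI's published prices.

```javascript
// Hypothetical helper: estimates the cost of one API call from token counts.
// Rates are passed in by the caller; the values used below are placeholders,
// not OpenAI's actual pricing.
function estimateCostUsd(inputTokens, outputTokens, inputRatePer1k, outputRatePer1k) {
  const inputCost = (inputTokens / 1000) * inputRatePer1k;
  const outputCost = (outputTokens / 1000) * outputRatePer1k;
  return inputCost + outputCost;
}

// Example with assumed numbers: 2,000 tokens in, 1,500 tokens out.
const perCall = estimateCostUsd(2000, 1500, 0.01, 0.03);
console.log("Estimated cost per call (USD): " + perCall.toFixed(3));
```

Because the charge scales with every request, anyone holding a leaked key can multiply that per-call figure at will, which is why the key should never be shared or embedded in a public page.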

Tldraw, developed by Steve Ruiz in London, is an open source collaborative whiteboard tool. It offers a basic infinite canvas for drawing, text, and media without requiring a login. Launched in 2021, the project received $2.7 million in seed funding and is supported by GitHub sponsors. When the GPT-4V API launched recently, Ruiz integrated a design prototype called “draw-a-ui” created by Sawyer Hood to bring the AI-powered functionality into tldraw.

GPT-4V is a version of OpenAI’s large language model that can interpret visual images and use them as prompts. As AI expert Simon Willison explains on X, Make it Real works by “generating a base64 encoded PNG of the drawn components, then passing that to GPT-4 Vision” with a system prompt and instructions to turn the image into a file using Tailwind. In fact, here is the full system prompt that tells GPT-4V how to handle the inputs and turn them into functioning code:

const systemPrompt = `You are an expert web developer who specializes in tailwind css.

A user will provide you with a low-fidelity wireframe of an application.

You will return a single html file that uses HTML, tailwind css, and JavaScript to create a high fidelity website.

Include any extra CSS and JavaScript in the html file.

If you have any images, load them from Unsplash or use solid colored rectangles.

The user will provide you with notes in blue or red text, arrows, or drawings.

The user may also include images of other websites as style references. Transfer the styles as best as you can, matching fonts / colors / layouts.

They may also provide you with the html of a previous design that they want you to iterate from.

Carry out any changes they request from you.

In the wireframe, the previous design's html will appear as a white rectangle.

Use creative license to make the application more fleshed out.

Use JavaScript modules and unpkg to import any necessary dependencies.`
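The flow Willison describes, a base64-encoded PNG sent to GPT-4 Vision alongside a system prompt, can be sketched roughly as follows. This is an illustration under stated assumptions, not tldraw's actual code: the helper names `buildVisionRequest` and `sendToOpenAI`, the user instruction text, the `gpt-4-vision-preview` model name, and the `max_tokens` value are all assumptions.

```javascript
// Hypothetical helper: packs a system prompt and a base64-encoded PNG into a
// chat-completions request body. Optionally appends the HTML of a previous
// design so the model can iterate on it.
function buildVisionRequest(systemPrompt, base64Png, previousHtml) {
  const userContent = [
    {
      type: "image_url",
      // The drawing is sent inline as a data URL rather than a hosted file.
      image_url: { url: "data:image/png;base64," + base64Png },
    },
    // Assumed instruction text, written for this sketch.
    { type: "text", text: "Turn this wireframe into a single html file using tailwind." },
  ];
  if (previousHtml) {
    userContent.push({ type: "text", text: previousHtml });
  }
  return {
    model: "gpt-4-vision-preview", // assumed model name
    max_tokens: 4096, // assumed response cap
    messages: [
      { role: "system", content: systemPrompt },
      { role: "user", content: userContent },
    ],
  };
}

// Hypothetical helper: posts the body to OpenAI's chat completions endpoint
// and returns the generated text. The caller supplies the API key.
async function sendToOpenAI(body, apiKey) {
  const response = await fetch("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: "Bearer " + apiKey,
    },
    body: JSON.stringify(body),
  });
  if (!response.ok) {
    throw new Error("OpenAI request failed with status " + response.status);
  }
  const data = await response.json();
  return data.choices[0].message.content;
}
```

In use, a caller would pass the result of `buildVisionRequest(systemPrompt, pngBase64)` to `sendToOpenAI` along with a key read from a local environment variable, then render the returned HTML in a sandboxed frame.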

As more people experiment with GPT-4V and combine it with other frameworks, we’ll likely see more novel applications of OpenAI’s vision-parsing technology emerging in the weeks ahead. Also on Wednesday, a developer used the GPT-4V API to create a live, real-time narration of a video feed by a fake AI-generated David Attenborough voice, which we have covered separately.

For now, it feels like we’ve been given a preview of a possible future mode of software development—or interface design, at the very least—where creating a working prototype is as simple as making a visual mock-up and having an AI model do the rest. As developer Michael Dubakov wrote when showing off his own Make It Real creation, “OK, @tldraw is officially insane. It is really interesting where we end up in 5 years… I can’t keep up with innovation pace anymore.”