Getty Images / Benj Edwards

Recently, a number of viral music videos from a YouTube channel called There I Ruined It have included AI-generated voices of famous musical artists singing lyrics from surprising songs. One recent example imagines Elvis singing lyrics to Sir Mix-a-Lot’s Baby Got Back. Another features a faux Johnny Cash singing the lyrics to Aqua’s Barbie Girl.

(The original Elvis video has since been taken down from YouTube due to a copyright claim from Universal Music Group, but thanks to the magic of the Internet, you can hear it anyway.)

https://www.youtube.com/watch?v=IXcITn507Jk

An excerpt copy of the “Elvis Sings Baby Got Back” video.

Obviously, since Elvis has been dead for 46 years (and Cash for 20), neither man could have actually sung the songs themselves. That’s where AI comes in. But as we’ll see, although generative AI can be amazing, there’s still a lot of human talent and effort involved in crafting these musical mash-ups.

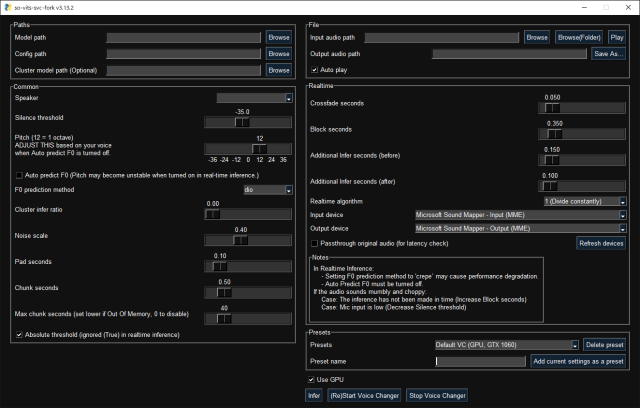

To figure out how There I Ruined It does its magic, we first reached out to the channel’s creator, musician Dustin Ballard. Ballard’s response was low in detail, but he laid out the basic workflow. He uses an AI model called so-vits-svc to transform his own vocals he records into those of other artists. “It’s currently not a very user-friendly process (and the training itself is even more difficult),” he told Ars Technica in an email, “but basically once you have the trained model (based on a large sample of clean audio references), then you can upload your own vocal track, and it replaces it with the voice that you’ve modeled. You then put that into your mix and build the song around it.”

But let’s back up a second: What does “so-vits-svc” mean? The name originates from a series of open source technologies being chained together. The “so” part comes from “SoftVC” (VC for “voice conversion”), which breaks source audio (a singer’s voice) into key parts that can be encoded and learned by a neural network. The “VITS” part is an acronym for “Variational Inference with adversarial learning for end-to-end Text-to-Speech,” coined in this 2021 paper. VITS takes knowledge of the trained vocal model and generates the converted voice output. And “SVC” means “singing voice conversion”—converting one singing voice to another—as opposed to converting someone’s speaking voice.

The recent There I Ruined It songs primarily use AI in one regard: The AI model relies on Ballard’s vocal performance, but it changes the timbre of his voice to that of someone else, similar to how Respeecher’s voice-to-voice technology can transform one actor’s performance of Darth Vader into James Earl Jones’ voice. The rest of the song comes from Ballard’s arrangement in a conventional music app.

A complicated process—at the moment

Michael van Voorst

To get more insight into the musical voice-cloning process with so-vits-svc-fork (an altered version of the original so-vits-svc), we tracked down Michael van Voorst, the creator of the Elvis voice AI model that Ballard used in his Baby Got Back video. He walked us through the steps necessary to create an AI mash-up.

“In order to create an accurate replica of a voice, you start off with creating a data set of clean vocal audio samples from the person you are building a voice model of,” said van Voorst. “The audio samples need to be of studio quality for the best results. If they are of lower quality, it will reflect back into the vocal model.”

In the case of Elvis, van Voorst used vocal tracks from the singer’s famous Aloha From Hawaii concert in 1973 as the foundational material to train the voice model. After careful manual screening, van Voorst extracted 36 minutes of high-quality audio, which he then divided into 10-second chunks for correct processing. “I listened carefully for any interference, like band or audience noise, and removed it from my data set,” he said. Also, he tried to capture a wide variety of vocal expressions: “The quality of the model improves with more and varied samples.”